Getting started with Evaluating Impact

What is impact?

Impact is the effect that one action or event has on another action or event. Impact can be positive, negative or neutral. For example, research by John Hattie shows that good-quality feedback can have a positive impact on student learning, but retention (keeping students back a year) can have a negative impact on student achievement. In education, people are increasingly asking about the impact of teaching on learning, or the impact of a new resource or strategy on student outcomes. Teachers want to know that both new initiatives and current practices are worthwhile.

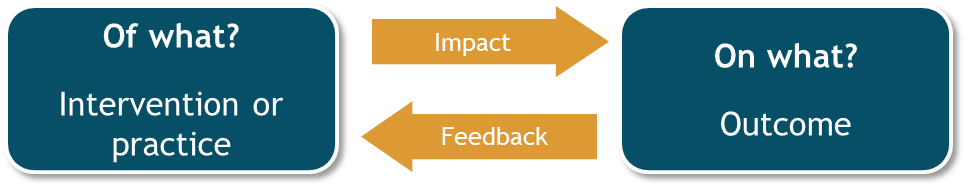

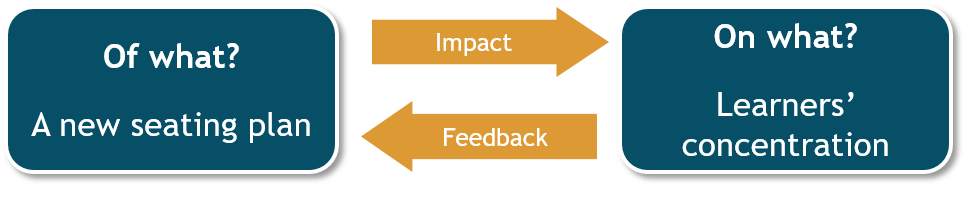

When talking about impact, we need to be clear about two things.

1) Impact of what?

2) Impact on what?

This is important when reading other people’s research and also when we do our own enquiries (see the section on research evidence for more information). Here are two examples for each of these two questions.

To be able to evaluate impact, we need to know where we are starting from. For this we need baseline data. From this starting point, we can evaluate learners’ progress and the impact of our teaching or a specific intervention (see the diagram above for examples). Baseline data is also useful as evidence to justify why we want to evaluate a particular area of teaching practice. For example, baseline data from student interviews may show that students struggle to explain which learning methods they find most effective, which suggests this is an area of practice worth developing.

Baseline data can take many forms. It might be quantitative (number-based), such as:

- previous exam results;

- test scores at the start of a topic or course;

- previous attendance data;

- how often a student contributes in class; or

- the number of words read out loud in a minute.

It might also be qualitative (word-based or visual), such as:

- interview data;

- notes from a lesson observation; or

- examples of student work.

The data you use as your starting point will depend on what you want to evaluate the impact of and on. In education, we look for practices that have a positive impact on learner outcomes in some way.

This might include:

- learning (a change in how learners think about a topic, and how they gain skills and knowledge and develop values);

- attainment (the level learners reach in an assessment); and

- achievement (the progress a learner makes in relation to where they started from).

Non-academic evidence, such as attendance data, can also be a good indicator of academic issues. For instance, if learners are struggling in a particular subject or year group, attendance might fall in that area.

You may also evaluate the impact of your pastoral or extra-curricular work, perhaps looking at outcomes such as improved school attendance for girls or improved interpersonal skills among students, which may in turn increase their confidence. You might even look at the effect of an academic intervention on a non-academic issue. For instance, you might want to look at whether a new homework policy has an effect on students’ wellbeing.

We can evaluate impact over a range of timescales. For example, you can evaluate changes in learning immediately after a lesson, but also after a month or even longer, to see if new ideas have become firmly established. Sometimes we need to evaluate quickly, because we want the result to help our short-term planning. But some initiatives can only be fully evaluated over the long term. For example, a new classroom activity might get good results at first because it is different and students are more likely to get involved. But over time, when learners have got used to the idea, there might be less evidence of a positive impact. Or, the impact of a joint pastoral and academic programme designed to get learners more involved might show few results in the short term, with a positive impact only evident after a year or more.

In the video below, Dr Sue Brindley says a big challenge teachers face is making sure what they evaluate is specific, small, focused and doable. What area of your own practice do you want to investigate? Is it specific, small, focused and doable?

Paul spent a lot of time giving written feedback and felt his students were not using it to help improve their work. He was often writing the same feedback to the same students. He decided to get his IGCSE classes to respond to his feedback. He built in time during his lessons for his students to develop their work using his feedback comments. The first impact he observed was to do with the questions his students were asking during this time. These questions showed his students were thinking carefully about the feedback and how best to respond to it. Over time, he could see his feedback comments were becoming more varied for each student.

How does evaluating impact link to Assessment for Learning (AfL)?

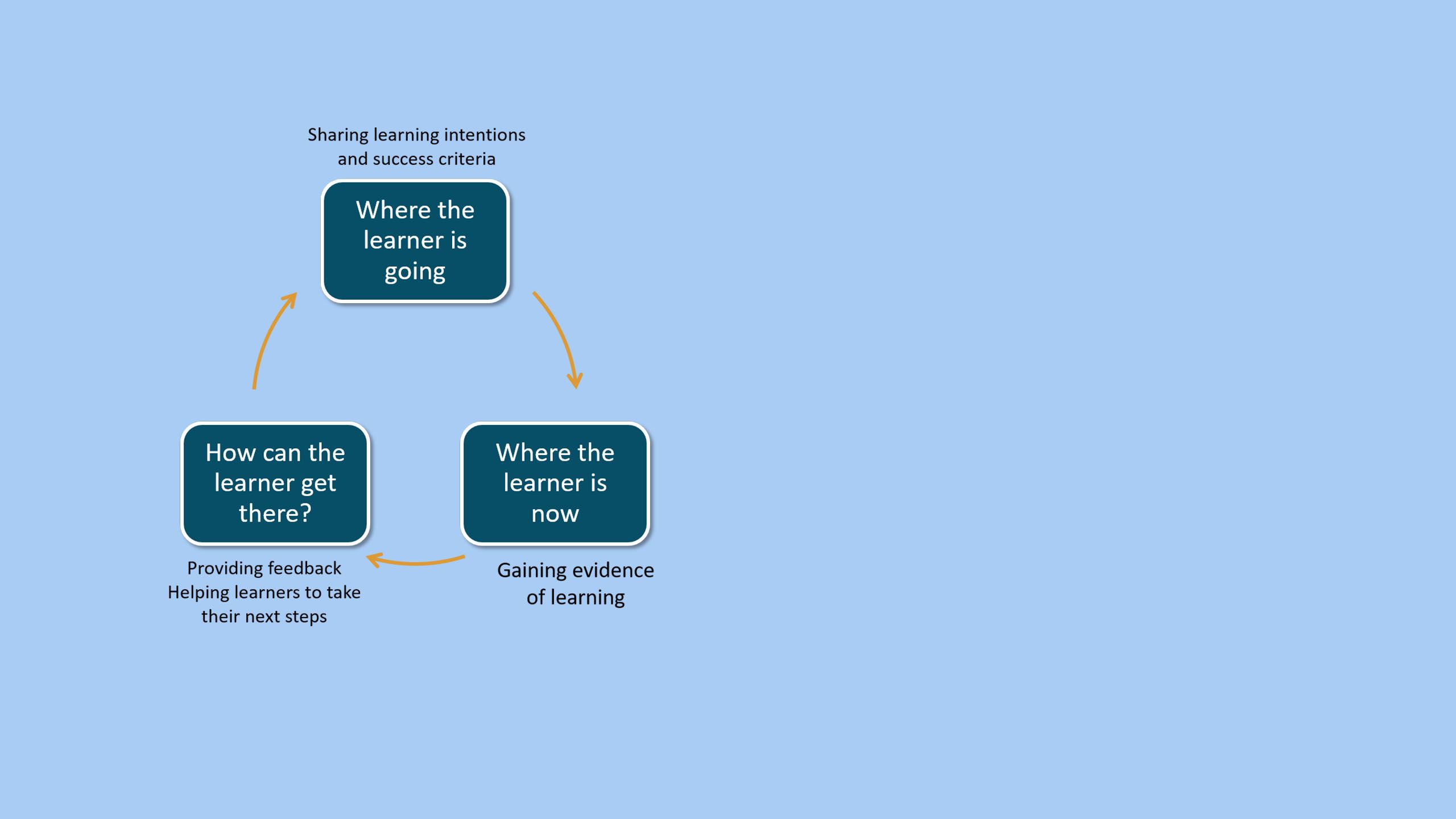

Evaluating impact is not new to most teachers. It’s simply a more systematic and thoughtful approach to their everyday reflective practice. Any teacher who uses AfL effectively is already evaluating impact (see the diagram above).

As teachers, we never assume that our teaching automatically produces learning. An effective teacher has a clear sense of where they want the learner to get to, based on the aims of the lesson and the learner’s existing knowledge (Where the learner is going). They constantly ask themselves ‘What have individuals and groups learned?’ and ‘How do I know?’. They find out by using AfL techniques, such as effective questioning during a lesson (Where the learner is now). They then adjust their teaching in response to what they find out about students’ learning (How can the learner get there?). For example, they may realise through questioning that students haven’t understood a particular point, so they explain it in a different way and check again. In an informal way, they are investigating the impact of their teaching on students’ learning.

"What have my students learned? How do I know?"

After the lesson, effective teachers carry out a short evaluation. For instance, you might think about how far you achieved the aims of the lesson. You might base this on evidence from the lesson, such as students’ spoken responses or written work. You then think about anything you need to do differently next lesson, or what you would change if you ran the activities again. You will think about whether any students need more help to understand what was covered before you move on. This is a quick and informal method of evaluating the impact of teaching on learning.

If you are not already using AfL in this way, this is your first step to evaluating impact. See ‘Getting started with assessment for learning’ for more information.

It is important to remember that there are no easy routes to evaluating impact. Schools are complex and there are many interlinked factors at work. It is normally hard to say for certain that one action has caused a particular outcome. However, this should not stop us trying to evaluate our impact as teachers and leaders.

This guide is an introduction to help you critically evaluate other people’s evaluations, and also to run your own evaluation.

In this guide we will also consider the research behind evaluating impact, the benefits of evaluating impact and how to carry out an impact evaluation. We will highlight some common misconceptions about evaluating impact. We will also introduce key techniques and issues, and suggest other resources you can use to develop your understanding and practice in this area. At the end, there is a glossary of key words and phrases and some suggestions about what you could do next.

What are the benefits of evaluating impact?

There are many benefits of evaluating impact for teachers, learners and schools.

Benefits for teachers

- Understanding learners: the most important thing for any teacher is to understand your learners. Evaluating the impact of your practice on their learning is one way to better understand them and their learning needs. By doing this, you will be more likely to know what works best and so be better prepared to support them.

- Empowerment: teachers become informed professionals who feel able to make a positive difference to their learners.

- Motivation: you continually develop your practice through reading and investigation, which can be interesting and satisfying and may help increase your job satisfaction.

- Expertise: research helps you develop expertise about the issues investigated. For instance, it helps you to know what works and what doesn’t in a particular setting. You also develop a greater understanding and experience of ways of collecting evidence for different purposes.

- Wider skills: evaluating impact helps you to be flexible and ready to respond thoughtfully to new ideas. It also helps you to develop your skills of critical engagement (informed questioning) with both the literature and your own practice.

Benefits for learners

- Access to effective teaching: learners are taught by informed teachers who are always striving to get the best out of them. They have access to teachers who are able to help them learn more and do so effectively, with a greater range of useful activities and strategies.

- Self-reflection: many studies ask for views from the learners (‘student voice’). This helps them to develop their own reflective skills.

Benefits for schools

- Teachers working together to share ideas: schools can create communities of practice made up of reflective teachers who feel responsible for their own professional development and teaching practice. They can draw on others’ practice to see what might work in their school (and what doesn’t), continually reviewing their professional development opportunities and learning from others.

- Improved teaching and learning across the whole school: teachers can learn from each other, and can try out initiatives to see if they have a positive impact in their school. They will have a better idea of what works and what doesn’t. This is likely to encourage an improvement throughout the whole of the school.

- Staff development: schools benefit from a professional, motivated and involved team of teachers.

- Effective allocation of resources: evaluating impact can help schools decide whether it is worth funding an initiative more widely. For example, a school that is considering putting electronic whiteboards in all its classrooms could instead run a small trial first and evaluate the impact.

"If the pupils made gains, the school was richer in knowledge about what worked; if the pupils did not make gains, the school was richer in knowledge about what did not work and what to avoid in the future."

In the video below, Dr Sue Brindley introduces the term 'sticky knowledge'. How could you overcome the problem of 'sticky knowledge' in your school?

What does the research evidence tell us about evaluating impact?

One popular way of evaluating practice in schools is through teachers’ own studies. There are a number of different ways of doing this, including practitioner enquiry, lesson study (see ‘Getting started with peer observation’) and collaborative professional enquiry. Each approach involves individuals or groups of teachers reflecting in a careful and disciplined way on their own practice in order to improve learner outcomes. Lawrence Stenhouse, working in England in the 1970s, has been a major influence on teacher research internationally. He encouraged teachers to carry out research into their own practice, and hoped that findings from many small-scale studies would be combined to influence policy and practice.

"It is teachers who, in the end, will change the world of the school by understanding it."

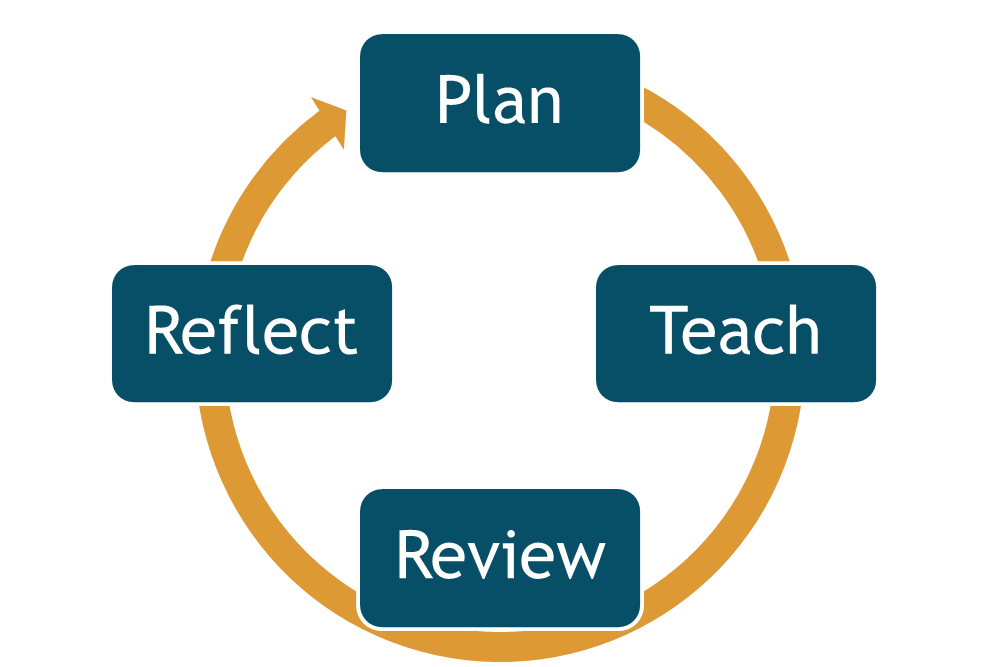

A basic cycle of practitioner enquiry into evaluating practice involves the following four stages (see the diagram below also).

- Plan: think about the area of teaching practice to investigate

- Teach: try out an intervention

- Review: consider the methods for collecting data, the sample of students and how to analyse the data

- Reflect: think about how to establish findings and the next steps. It is important that the findings from this enquiry help future practice, and that you share them with a wider group.

Each approach to practitioner enquiry mentioned above also has distinctive features, and you can find more information about these from the reading list in the handout at the end of this guide.

Practitioner enquiry cycle

There is a wide range of research in education, from small-scale practitioner enquiry at one end to large-scale international studies at the other. Much of that research aims, in some way, to evaluate the impact of current practice or a particular innovation on learner outcomes. Many small-scale teacher enquiries have been carried out that have been helpful to individual teachers and their immediate context, but often the findings have not been collected and combined to the extent Stenhouse hoped. Research is always more powerful when we also look at the work of other people doing similar things. With this in mind, it would be worth talking to your colleagues to compare and share your views on evaluating impact.

There is an important role for projects which bring together findings from many different research studies and compare them to evaluate the overall impact of different practices. These projects can be systematic reviews of research or meta-analyses. Systematic reviews may include studies that have produced qualitative as well as quantitative data, but because meta-analysis uses statistical processing, it can only use quantitative findings.

For example, John Hattie carried out a meta-analysis of over 800 meta-analyses, drawing on over 50,000 published research studies, all of which had generated effect sizes.

Carrying out a meta-analysis is complex and it isn’t a perfect science. There are issues about what research actually gets published and so is used in a meta-analysis. For example, researchers have noticed that projects which produce statistically significant results are more likely to get published. Robert Rosenthal from Harvard University came up with the term ‘file-drawer problem’ in 1979 to describe this: researchers were more likely to file away in a drawer findings which didn’t support their theories or which were not statistically significant. This means there may be unpublished research showing that certain interventions have less impact than the published findings suggest, and because those findings aren’t shared, we don’t know about them. This could give us an overly positive picture of what has an impact in education. You can learn more about these issues from the reading list.
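The file-drawer problem can be illustrated with a small simulation. This is a minimal sketch under stated assumptions, not a model of any real body of research: each hypothetical ‘study’ compares two groups of 20 learners for an intervention that has no true effect, and we assume that only positive, statistically significant results are published while the rest stay in the drawer. The function name `simulate_file_drawer` and all parameter values are illustrative choices.

```python
import random
import statistics

def simulate_file_drawer(n_studies=2000, n_per_group=20, true_effect=0.0, seed=1):
    """Return (mean effect size of all studies, mean effect size of
    'published' studies, number of published studies).

    Assumption: a study is 'published' only if its result is positive and
    statistically significant (t > 2.02, roughly the two-tailed 5% cut-off
    for two groups of 20)."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    all_effects, published = [], []
    for _ in range(n_studies):
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        treated = [rng.gauss(true_effect, 1.0) for _ in range(n_per_group)]
        # Effect size: difference in means divided by the pooled standard deviation
        pooled_sd = ((statistics.variance(control) + statistics.variance(treated)) / 2) ** 0.5
        d = (statistics.mean(treated) - statistics.mean(control)) / pooled_sd
        all_effects.append(d)
        # For two equal-sized groups, the t statistic is d * sqrt(n / 2)
        t = d * (n_per_group / 2) ** 0.5
        if t > 2.02:
            published.append(d)  # only these results leave the 'file drawer'
    published_mean = statistics.mean(published) if published else float("nan")
    return statistics.mean(all_effects), published_mean, len(published)

overall, published_only, n_published = simulate_file_drawer()
print(f"All studies: {overall:.2f}; published only: {published_only:.2f} ({n_published} studies)")
```

Under these assumptions, every ‘published’ study reports an effect size above 0.6, while the average across all the studies is close to zero. A review that could only see the published studies would overestimate the impact of an intervention that, by construction, does nothing.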

With this in mind, when using reviews of evidence it is always important to ask these key questions.

- On what criteria were the studies selected?

- What sorts of studies were excluded as a result?

- When were the selected studies published?

- Who funded the research?

- In which countries were the studies carried out?

- What type of schools or educational settings were the studies carried out in? What age were the children?

- What is the impact of and on?

This will help you consider how relevant the findings from these reviews are to your own school. No study is going to cover everything. But these questions help us to understand how we can apply the study to our own context, and also to understand the limits of that study.

Remember that each educational setting is different. Just because something has worked in certain classrooms does not necessarily mean it will transfer to yours in the same way. Pawson and Tilley argue that education is complex and they say that what works in one school or with one group of learners may not work so well for others. Therefore, we shouldn’t be asking ourselves big sweeping questions like ‘what works in education?’ but instead we need to ask ‘what works for whom, when, and under what circumstances?’

“Our baseline argument throughout has been not that programs are ‘things’ that may (or may not) ‘work’; rather they contain certain ideas which work for certain subjects in certain situations.”

However, don’t dismiss an article just because its context is different from yours, either. There may be something discussed by the author that is particularly relevant to you, your learners or your school.

Effect sizes are numbers that show the size of any difference between two data sets, taking into account the spread of the data. For example, you could compare the test outcomes for a class before and after you taught them a particular topic. The studies Hattie included in his meta-analysis gave effect sizes for the impact of various educational practices on learner achievement. Hattie then combined these to give an overall result for each practice. For example, feedback had an overall effect size of 0.73, making it the 10th most effective out of all the interventions evaluated. The results of the meta-analysis enabled Hattie “to build an explanatory story about the influences on student learning” in his book Visible Learning (2009, p22).
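The calculation behind an effect size can be sketched in a few lines. The guide does not say exactly which formula each study used, so the version below is an assumption: a common one, the standardised mean difference (often called Cohen’s d), which divides the difference between two means by the pooled standard deviation of the scores. The function name `effect_size` and the test scores are hypothetical.

```python
import statistics

def effect_size(before, after):
    """Standardised mean difference: (mean after - mean before) / pooled SD."""
    n1, n2 = len(before), len(after)
    # Pooled variance weights each group's sample variance by its degrees of freedom
    pooled_var = ((n1 - 1) * statistics.variance(before) +
                  (n2 - 1) * statistics.variance(after)) / (n1 + n2 - 2)
    return (statistics.mean(after) - statistics.mean(before)) / pooled_var ** 0.5

# Hypothetical test scores (out of 20) for the same class before and after a topic
before = [8, 10, 9, 12, 11, 7, 10, 13]
after = [11, 12, 10, 15, 13, 9, 12, 14]
print(round(effect_size(before, after), 2))  # prints 1.0
```

For simplicity this treats the ‘before’ and ‘after’ scores as two separate groups; when the same learners are tested twice, researchers sometimes use a different standard deviation in the denominator, which can give a different value. Either way, an effect size is only as meaningful as the test behind it.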

Common misconceptions

There are a number of common misconceptions around understanding and evaluating impact in education.

Numerical evidence of impact is best.

Numerical evidence, such as effect size, can be very useful, but data is only as good as its methods of collection, analysis, and the conclusions reached. Numerical results from a test, where the test is not a good measure of learning, will not be helpful, even if the numbers look impressive. The best data for a study might be quantitative, qualitative, or a mixture of both.

Correlation equals causation.

Just because there is an upward trend in two sets of data (or one has gone up when the other has gone down) doesn’t mean that one has necessarily caused the other. They may not be related at all, or another factor may have caused them both. For example, the A-level results of the maths department may have risen over the past three years, while the number of girls taking maths at A-level has also increased. We can’t assume that the increase in girls has caused the increase in attainment. Other factors, such as a new feedback policy or more one-to-one conversations between students and their teachers about progress, might have been the cause. The philosopher David Hume considered in detail how we think about cause and effect. He argued that we never directly observe one event causing another; we only observe that one event regularly follows another. This is a reminder not to assume that a link between two events is a causal one.
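A correlation coefficient puts a number on how closely two sets of data rise and fall together, but it cannot say which (if either) caused the other. The sketch below uses invented figures for the A-level maths example above: the number of girls taking the course and the number of one-to-one progress conversations both correlate strongly with the department’s average result, so the numbers alone cannot distinguish between these explanations. The function `pearson_r` is a hand-written Pearson correlation, and all data is hypothetical.

```python
import math
import statistics

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical figures for four consecutive years
girls_taking_maths = [10, 12, 15, 18]
one_to_one_conversations = [20, 26, 31, 38]
average_result = [31.0, 31.5, 33.0, 34.5]  # average points per student

print(round(pearson_r(girls_taking_maths, average_result), 2))        # prints 0.99
print(round(pearson_r(one_to_one_conversations, average_result), 2))  # prints 0.98
```

Both values are close to 1, yet the calculation knows nothing about why the results rose; that question has to be answered with other evidence and careful reasoning about other possible causes.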

Teachers don’t have time to evaluate impact.

Evaluating the impact of teaching on learning is a key part of your work in AfL. As part of their everyday reflective practice, effective teachers ask: Are the individuals and groups I teach learning? Are they making progress? How do I know? Could adjusting my practice mean they make greater progress? You can often use less formal methods of evaluating impact as part of normal classroom practice.

Research projects don’t need any resources.

More extensive evaluations of practice or innovations may involve extra time for planning, collecting data, analysis and sharing findings. You and your school will need to think about what time you can allocate to this. For instance, your school might want to plan time to focus on investigations that are likely to be most worthwhile to you, your learners and the school. It is important to make sure that a project does not create an unreasonable workload, and to recognise that further resources may be needed to make sure the evaluation is of a high quality.

We should be able to see the effect of an innovation quickly if it is worthwhile.

While some innovations should result in relatively quick outcomes (for example, whether a new seating plan encourages pupils to be more involved could be evaluated after a few lessons), others are complex and take time to put in place and for results to become visible. For instance, AfL techniques may take you and your learners a while to adopt and, as a result, take time to become an effective part of classroom practice. In this second example, you would need to evaluate the impact on learner outcomes over an extended period. Also, sometimes a new method can look as if it is doing well in the short term, because students are enjoying the innovation, but once the novelty wears off it becomes clear there is not a major effect. The important thing is to give yourself and the intervention enough time for it to be fully in place before judging its impact.

Small-scale teacher impact evaluation is too small to tell us anything useful.

While there is a role for projects which collect and combine the findings of small-scale studies, small-scale studies do not have to be generalisable to be useful. They may be very useful for you, your class and the school in which they are carried out. We should not let the fact that we can’t produce a large-scale study put us off evaluating impact at all.

All data is numerical.

Data can be numerical (quantitative) but it can also be non-numerical, such as free responses in a survey, or the results of interviews (qualitative). Albert Einstein is supposed to have said, “Not everything that can be counted counts, and not everything that counts can be counted.” We need to think carefully about what is the right way to collect data for our study. Should we be using quantitative methods, or is a qualitative approach better? Or should we be using a mixture? Sometimes numbers might have a role, but they might not be the most useful thing to tell us about impact. For example, it is hard to properly evaluate students’ wellbeing using just numerical data, even though things like attendance data might be a useful indicator. We might also want to use other data, such as records of discussions with students and parents.

If there’s published evidence that an innovation has had an impact, it must be good.

First, it’s important to ask where it’s been published and what level of quality control it has been through. Material in peer-reviewed international journals will have been through a strict quality-control process. Something an individual has published on their own website may still be excellent but may not have gone through such a thorough checking process. Whatever the source, you should always take a critical approach to the content. Consider carefully the context in which the research was produced compared with your own situation, and reflect on whether the conclusions would work for your learners. Ideally, you should use evidence from different sources and weigh it up carefully before deciding to try something, particularly if this would involve time and resources.

What works for one class or teacher must work for everyone.

This isn’t always the case as schools are very varied. Something that works in one cultural setting may not work the same way in another. Something that is effective with younger students may not appeal to older ones. The Friday afternoon class might not react the same way as the Wednesday morning one. Learners’ previous experiences and even teachers’ personalities and approaches are different. While trying out new ideas that have worked for someone else is nearly always a good idea, you may not always get the same impact as someone in another classroom. This does not always mean that you should stop straight away. It’s often worth reflecting carefully to see if you can adjust the approach for your own learners, or try it with a different group of students.

The only data that matters is the final examination grade.

Examination results are of course important for schools, learners and their families as they can tell us a lot. However, lots of other data is also important. We might want to look at learners’ performance in mock examinations or in class assessments. We might want to look at their attainment and also their achievement, which focuses on the progress that they have made. There might be other data too, such as attendance or behaviour data. We might want to look at qualitative data taken from, for instance, interviews with students, families or teachers.

Impact can only be positive.

Impact may also be neutral or negative. A tactic or initiative might result in learners’ progress slowing down, rather than speeding up. The innovation may also have ‘side effects’: by focusing on one element of teaching and learning, another may be neglected or removed. For example, evaluating an intervention may show a rise in attainment, but learners’ enjoyment of the subject may go down, which in turn may affect their attendance or involvement in those lessons. This idea of side effects is discussed in detail by Yong Zhao (2017) in his review of educational research in America. It’s important to reflect broadly on the effect of the innovation on different groups and to avoid too narrow a definition of success.

In the video below, Dr Sue Brindley says teachers tend to think they must collect lots of data to use in their evaluation of impact, but this is not true. What data will help with your evaluation? Does it already exist? If it doesn't, how will you collect it?

Ruchira did not think her students knew which techniques were most effective when revising for their history exams. She decided to try to equip her students with a range of effective revision techniques they could use at home. She began modelling techniques such as mind maps, flashcards and preparing summaries in lessons, explaining what these techniques were useful for and how best to use them. She then set these for homework. The impact was a noticeable difference in what her students were saying about their revision, as they could say which techniques they used and what they used them for. When marking their books she noticed over half of her class were using the techniques to revise topics on top of what she had set for homework.

Evaluating impact in practice

Remember, you need to know where things are right now in order to evaluate what change has taken place. You might already have this baseline data. However, if you don’t, you need to think about how you are going to collect it. Baseline data is important as it makes sure you base your starting point on evidence rather than assumptions. For example, poor behaviour in lessons may hide the potential of students who are capable of achieving highly. If you are not aware of their previous attainment, your evaluation of impact may not be accurate.

In your everyday practice, AfL techniques are a key way of finding out about the impact of your teaching on students’ learning. Effective teachers use these techniques both regularly and frequently.

You might also want to try gathering different types of data, which you can then use to evaluate impact. When collecting data, it’s important to think about how the data will help you with what you want to find out about. You will need to think about how you will record your data, whether you will be the person asking the questions or handing out the questionnaire (if you are asking students about your teaching, will they be truthful?), how you will store your data and how you will share it.

We outline some of the main data-collection methods in this handout. Bear in mind this is not a full list. Make sure you know the benefits and pitfalls of each method before you use it (see the reading list for references).

"…when it comes to choosing a method, researchers should base their decision on the criterion of ‘usefulness’. Rather than trying to look for a method that is superior to all others in any absolute sense, they should look for the most appropriate method in practice."

We are always on the lookout for different pieces of evidence of impact. For a more thorough evaluation, it helps if we combine different sources of data to find out about the same situation or issue. This is called triangulation (see the diagram below). For instance, we might look at whether student marks have risen after a particular intervention. But we might also do some carefully planned ‘student voice’ work (for example, a short questionnaire asking students for their opinion on the intervention), or see if their attendance has improved.

Triangulation

We can triangulate more informally too. For instance, if our AfL techniques suggest that some students have struggled with a particular essay, we might wonder if this is due to the topic they are studying or their essay-writing skills. So we might set a factual recall test, and also look at how they have done on essays on other topics. Or we might check with their pastoral tutor whether they have had some issues outside of school which are distracting them.

When you are collecting data to evaluate the impact of your practice, you should also think about ethical considerations. Some data is naturally produced in the classroom as part of your day-to-day teaching, but you may need to make special arrangements for other data-collection methods such as interviews or video-recording. In some settings, you may need permission from parents and learners for these. It is also important to explain to the learners what you are doing and why. Many young people will relish the opportunity to be included in research and are keen to have their voices heard. Some projects can even involve young people as researchers. You may need specific permission to share findings or examples of data with a wider audience. Make sure you consult the appropriate person in your school for guidance when planning an evaluation of impact.

Remember, don’t feel you have to do the perfect study. It’s important to demonstrate good practice and you will want your findings to be as representative of the truth as possible, but don’t make the mistake of doing nothing and being unsure of the difference your teaching or an intervention makes because you don’t think your study is going to be perfect.

Maria wanted to improve the punctuality of her A-level students, making sure they arrived at lessons on time. She decided to ask any students who arrived late to wait outside until an appropriate moment to enter, so as not to disrupt the other students. By tracking the attendance data of her A-level classes, Maria saw a marked reduction over a short time in the number of students arriving late to her lessons.

Evaluating impact checklist

If you are new to evaluating impact, it will help to work through the following points.

1. What do you want to investigate and why?

Make sure that you are clear about your aims. Why do you want to focus on this topic at the moment? Perhaps it arises from an issue you’ve noticed in teaching and learning, or a strategy that is being introduced in your school. Perhaps it’s something you’ve heard about from another school, seen at a training event, or read about in the literature. Don’t take on too much, especially if it’s the first time you’ve tried this approach.

2. How does this fit into the bigger picture of your faculty or school?

Consider how this is part of wider priorities. Does it fit with your school’s development plan? Discuss your ideas with your line manager or senior leadership team. What suggestions or support can they give? Is there another colleague or small group you could team up with to share planning and findings?

3. What can you read to support your enquiry?

How will you know this reading is relevant and of good quality? Aim to find out more about the education topic and evaluation methods. Professional literature in your subject might be helpful, or the reading list in this guide could provide a starting point on methods. Your colleagues may also be able to recommend material. Remember to always engage critically with reading. Ask who produced it, why, where and when? Was the context similar to or different from your own school?

4. Impact of what, and on what?

Try to be precise about this. What exactly are you evaluating? A new teaching approach or technique? (Impact of what?) Are you looking for impact on learning, attainment, achievement or on other aspects of education such as wellbeing or enjoyment? (Impact on what?)

5. What will you do?

Plan what you will try out and when. Will you focus on a number of students within a class, a whole class or more than one class? How will you decide? Sometimes our choices are limited (perhaps you only teach one A-level class) and at other times we have more choice. It is often helpful to try things out with a range of students who differ in past attainment, age, gender and so on. Remember not to take on too much. It’s easy to underestimate the time it takes to look through data, so make sure your plans are manageable.

6. How will you collect data?

Will more than one method be helpful? Remember that the method you use must be appropriate for the aim of your study. A good question to ask yourself is, does the data already exist? You may have information in your mark book, attendance registers or recordings from student discussions that you could use. When evaluating impact in schools, much of the data is often produced naturally in the teaching process (for example, student work or spoken responses) and you just have to think about how to record it. You may like to add methods such as questionnaires, but make sure that you can manage your plans in the time available and that they produce information that is useful for your study.

7. How will you analyse your data?

Often this is just a matter of photocopying, reading, annotating and collating. Look out for patterns and themes that you can track across other data you have collected. When methods produce numerical data (such as test scores), statistical techniques like calculating the effect size can be helpful.
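To make the idea of effect size more concrete, here is a minimal sketch of one common measure, Cohen’s d: the difference between the mean scores of an intervention group and a comparison group, divided by the pooled standard deviation of the two groups. This is an illustrative assumption rather than the only way to calculate effect size (some studies divide by the comparison group’s standard deviation instead), and the test scores below are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(intervention: list[float], comparison: list[float]) -> float:
    """Cohen's d: difference in means divided by the pooled standard deviation.

    Assumes each group has at least two scores (stdev needs n >= 2).
    """
    n1, n2 = len(intervention), len(comparison)
    s1, s2 = stdev(intervention), stdev(comparison)  # sample standard deviations
    # Pool the two spreads, weighting each by its degrees of freedom (n - 1)
    pooled_sd = sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    return (mean(intervention) - mean(comparison)) / pooled_sd


# Hypothetical end-of-topic test scores (out of 20), for illustration only
intervention_scores = [12, 14, 16, 18]
comparison_scores = [10, 12, 14, 16]

d = cohens_d(intervention_scores, comparison_scores)
print(f"Effect size (Cohen's d): {d:.2f}")  # prints 0.77
```

A positive value means the intervention group scored higher on average, and a negative value means it scored lower. With groups as small as these, treat the number as a rough indication of the size of a difference rather than as firm evidence.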

8. How will you know whether your intervention was successful?

Think about what your success criteria are. What would you hope to see as a result of your intervention? What difference do you want to see compared with your baseline data? For example, your success criteria for an intervention to improve student wellbeing might include higher student attendance than in the previous year.

Also, make sure you have given your intervention enough time to show whether or not it was successful. For example, students may enjoy a new intervention aimed at developing the correct use of key terminology in their written work when they first use it, but their initial enthusiasm may fade over time, so that your later findings show less of an improvement.

You may also want to think about evaluating success in stages. If you are introducing an intervention across the whole school, it is a good idea to try it out with a few teachers or students first and evaluate its success before rolling it out more widely, and then to carry out a further evaluation.

Finally, don’t worry if it wasn’t successful! If your evaluation showed that a particular intervention had less of an impact than you hoped or that it didn’t work, that’s fine. This is still important information to share with colleagues to help with future planning.

Next Steps

These are some ideas to think about to get you started!

- How could you improve the way you use AfL strategies? Do you use, for example, exit passes to help you evaluate the impact of your teaching on all pupils’ learning after a lesson? Could you use, for example, hinge-point questions when evaluating the impact of your teaching on all pupils’ learning? How could you evaluate other aspects of learners’ school experience? Do you use more than one source of data (for example, observation, analysis of work, learner feedback), as appropriate?

- Start reading up on a topic that interests you. If you’re not sure where to start, Hattie (2012) or Coe et al (2014) cover a range of teaching and learning issues. Perhaps you could meet with a reading partner or start a reading group in your school to discuss what you’ve learned? How will this affect your practice? What issues might be raised that you could explore?

- Think about an intervention you have already done. Try to evaluate its impact by reflecting on any changes you noticed in your learners. You may already have collected data you can analyse that will help you with your evaluation.

- Think about which issue or intervention you want to assess the impact of. Ask yourself: Why is this important? What questions do you want to find answers to? What are the success criteria for this evaluation? Who will you try the intervention with? How and when will you do this? What data will you collect? How will you analyse your findings? How will you share your findings and who will you share them with? How will the results of your evaluation influence your practice and development priorities?

- Try planning a practitioner enquiry based on your own situation and using the questions above. The focus of the enquiry could arise from a challenge in the classroom or a review of your strengths and areas of your teaching you could develop. The Cambridge Teacher Standards might be a good starting point for review.

Want to know more? Here is a printable list of interesting books and articles on the topics we have looked at.

Glossary

Achievement

A learner’s progress.

Action research

How teachers research their own practice through a series of linked enquiries.

Assessment for learning (AfL)

Teaching strategies that help teachers and students evaluate progress, providing guidance and feedback for subsequent teaching and learning.

Attainment

The level reached in an assessment.

Case study research

A research approach to investigating an issue, event or situation in depth in a particular context.

Collaborative professional enquiry

Teachers work together to identify challenges and develop solutions through collecting and analysing data.

Control group

A group that matches the characteristics of the intervention group in as many ways as possible but does not receive the intervention. The control group data is then used as a comparison.

Critical realist evaluation

An evaluative approach to understanding how change happens in order to show the complex associations between an intervention and outcomes. Key questions to consider include: How does it work? Who does it work for? Under what circumstances?

Data

Results from quantitative or qualitative methods.

Effect size

A number showing the size of any difference between two data sets, taking into account the spread of the data.

Generalisability

The extent to which findings from a research study might apply across other schools.

Hinge-point question

A question designed to generate evidence of understanding as part of a single lesson and/or learning progression. Students’ answers show whether they are ready to move on, need to change task or need to go back a step.

Impact

The effect that one thing has on another thing.

Learning

A change in learners’ thinking about a topic in a desired direction, including gaining skills and values.

Lesson evaluation

Reflecting back on a lesson to consider how far individuals and groups achieved your aims for the lesson, and whether you should do anything differently another time.

Lesson study

A collaborative approach in which teachers work together to improve teaching and learning, developed in Japan.

Logic model

A representation of the elements and expected short- and long-term outcomes of an innovation, used in planning and evaluation.

Meta-analysis

The statistical analysis of findings from a selection of research studies.

Practitioner enquiry

How teachers investigate their own practice with the aim of improving learner outcomes.

Qualitative data

Word-based or visual material such as spoken responses, interview transcripts or drawings.

Quantitative data

Results of numerical measurements such as test scores.

Randomised controlled trials (RCTs)

Experiments in which those taking part are randomly assigned to two groups, one of which receives the intervention and the other does not.

Reflection

A systematic look back at your teaching that allows you to make links from one experience to the next, helping to make sure your students make as much progress as possible.

Reflective practice

A way of gaining new insights into our practice through learning from experience.

Reliability

The extent to which the same results would be achieved if the study was repeated.

Statistically significant

Describes a result that is unlikely to have arisen by chance or some random factor in the data alone, and so is more likely to reflect a systematic difference or trend that would indicate a real finding.

Student voice

A form of pupil consultation where students share their opinions, views and thoughts on their learning or their school. The school can use this information to shape thinking about their students and how they learn, but also to inform the school improvement process more broadly.

Systematic reviews of research

Reviews of carefully selected research studies that bring together evidence to answer pre-set questions.

Triangulation

Collecting data from more than one source to gain a fuller understanding.

Validity

The extent to which a data-collection method measures what you want it to measure.